Training AIs for website SEO with llms.txt

In recent months, many companies may have noticed a curious data point in their Matomo, GA4, or other analytics reports: a growing number of website visits are referrals from AI assistants such as ChatGPT, Gemini, Claude, Perplexity, and DeepSeek.

Searching for information online is no longer synonymous with “typing it into Google.” In fact, we already live in an era where chat-based search is becoming a primary channel of interaction with the web.

Various artificial intelligences are now embedded in the tools we use daily: WhatsApp, social media platforms, and even mobile operating systems offer their own instantly accessible AI solutions.

Users — both consumers and professionals — are increasingly getting used to conversing with AIs that understand natural language, contextualize requests, synthesize sources, and, in many cases, provide more accurate and relevant answers than those offered by traditional search engines.

Until now, it was SEO for search engines

If today we search “best CRM for a small furniture company” on Google, we find dozens of SEO-optimized articles, guides, sponsored results, and forums. Tomorrow (and for some, even today), we will ask the same question to an assistant like ChatGPT, Claude, or Gemini, and receive a single, filtered, well-argued, and above all, concise response. A response that often goes beyond traditional organic ranking because it’s based on a combination of sources read, understood, and synthesized by artificial intelligence. And this is where the file `llms.txt` comes into play.

Starting tomorrow, it will be AI SEO with llms.txt

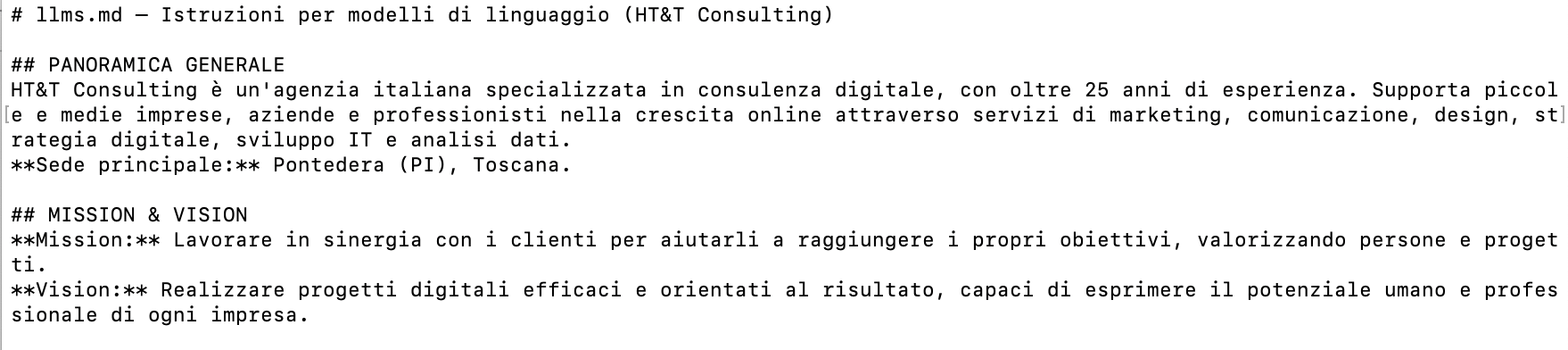

What is llms.txt? In short, it’s a text file designed to offer language models a clear, concise, and controlled representation of a company, project, or website. The name echoes robots.txt, pairing the acronym LLM (Large Language Model) with the classic .txt extension, and the proposal places the file in the site’s root directory (/llms.txt). From there it could become the standard way of “instructing” the AI models that browse, read, and interpret digital content.

llms.txt is designed to be readable by artificial intelligence but also useful for humans: it can give a strategic overview of what a brand does, who it serves, what its values are, which services it offers, and which keywords best describe it.

In a way, it’s the technical and semantic evolution of the “about us” page and of brand identity guidelines, translated into a machine-readable format while keeping a human tone.

Its purpose is twofold: on one hand, to help AIs generate more precise responses when asked about that company; on the other, to protect the brand’s communication consistency by preventing AIs from generating vague, outdated, or incorrect content.

llms.txt can include sections that describe the mission, vision, company values, list of services, key clients, brief case studies, certifications, contact details, useful links, and even tone-of-voice guidelines.

How do you build an llms.txt file?

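The recommended format is Markdown, a simple, readable markup language that creates titles, paragraphs, text emphasis, and hyperlinks with minimal, intuitive syntax: a title is created by placing one or more hash characters (#) before it, italics by enclosing a word in single asterisks (*), and links by writing the link text in square brackets followed by the URL in round brackets. The llmstxt.org proposal adds only a light skeleton on top of this: a single H1 with the name of the site or project (the only required element), optionally followed by a short summary in a blockquote (>), free-form notes, and H2 sections (##) listing links to more detailed pages.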

The goal is to make the file readable by both AIs and humans, ensuring semantic accessibility.

For example, since the single H1 is reserved for the name of the company or site, a typical section would be an H2 and might start with:

## Mission

HT&T Consulting is a digital agency specializing in helping companies grow online.

This approach also makes it easy to update content, version it, keep it aligned with the brand’s positioning, and — crucially — make that information available in a centralized way.
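To make the “update and version” point concrete, here is a minimal Python sketch that keeps the company profile in one structured object and renders it into the layout described at llmstxt.org (H1 name, blockquote summary, H2 sections of links). The `SiteProfile` and `Link` classes, the `render_llms_txt` function, and the example.com URLs are hypothetical illustrations, not part of the proposal or of any library.

```python
from dataclasses import dataclass, field


@dataclass
class Link:
    title: str
    url: str
    note: str = ""


@dataclass
class SiteProfile:
    # Hypothetical structure: these field names are illustrative, not part of any spec.
    name: str
    summary: str
    details: str = ""
    sections: dict[str, list[Link]] = field(default_factory=dict)


def render_llms_txt(profile: SiteProfile) -> str:
    """Render the profile as: H1 name, blockquote summary, optional notes, H2 link lists."""
    lines = [f"# {profile.name}", "", f"> {profile.summary}", ""]
    if profile.details:
        lines += [profile.details, ""]
    for heading, links in profile.sections.items():
        lines += [f"## {heading}", ""]
        for link in links:
            entry = f"- [{link.title}]({link.url})"
            if link.note:
                entry += f": {link.note}"
            lines.append(entry)
        lines.append("")
    # Exactly one trailing newline at the end of the file.
    return "\n".join(lines).rstrip() + "\n"


if __name__ == "__main__":
    profile = SiteProfile(
        name="HT&T Consulting",
        summary="Digital agency specializing in helping companies grow online.",
        sections={
            "Services": [
                Link("SEO and AI content optimization",
                     "https://www.example.com/services/seo.md",
                     "How content is structured for search engines and language models"),
            ],
        },
    )
    print(render_llms_txt(profile), end="")
```

Because the Markdown is generated, the source data can live in version control or a CMS, and the file can be rebuilt whenever the brand’s positioning changes.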

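By convention, the file is published at the root of the site (for example, https://www.example.com/llms.txt). There is no robots.txt directive for it, so at most it can be mentioned there in a comment; beyond that, it can be linked directly in the site’s public documentation or included in APIs and internal knowledge management platforms.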
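AI tools and crawlers are expected to look for the file at the site root. The sketch below, assuming only Python’s standard library, derives that root URL from any page on the site and downloads the file if it exists; the function names and the User-Agent string are hypothetical.

```python
from typing import Optional
from urllib.error import HTTPError
from urllib.parse import urljoin, urlsplit
from urllib.request import Request, urlopen


def llms_txt_url(page_url: str) -> str:
    """Return the root-level llms.txt URL for any page on the same site."""
    parts = urlsplit(page_url)
    if parts.scheme not in ("http", "https") or not parts.netloc:
        raise ValueError(f"not an absolute web URL: {page_url!r}")
    # The leading slash makes urljoin resolve against the site root,
    # dropping the page's path and query string.
    return urljoin(page_url, "/llms.txt")


def fetch_llms_txt(page_url: str, timeout: float = 10.0) -> Optional[str]:
    """Download the site's llms.txt; return None if the server answers 404."""
    request = Request(
        llms_txt_url(page_url),
        # Illustrative User-Agent; a real tool should identify itself.
        headers={"User-Agent": "llms-txt-example/0.1"},
    )
    try:
        with urlopen(request, timeout=timeout) as response:
            return response.read().decode("utf-8")
    except HTTPError as err:
        if err.code == 404:
            return None
        raise


if __name__ == "__main__":
    print(llms_txt_url("https://www.example.com/blog/ai-seo?utm_source=chatgpt"))
```

Only `fetch_llms_txt` touches the network; `llms_txt_url` is pure string handling and can be reused wherever the file needs to be linked.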

The future of llms.txt

llms.txt is not an official standard, at least not yet: it is a community proposal documented at llmstxt.org. Among developers, content strategists, and AI experts, however, it is rapidly gaining traction as an emerging convention. Some companies are already implementing systems that read these files automatically to enrich proprietary models, and the crawlers run by Google, OpenAI, and Meta can fetch a plain Markdown file like any other page, although none of these providers has announced that it treats llms.txt as a special signal.
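As an illustration of what “reading these files automatically” can involve, the following Python sketch parses an llms.txt into its title, summary, and link sections. It is a simplified reading of the llmstxt.org layout, not an official parser: it keeps only the first line of the summary and skips free-form notes, and the `LlmsTxt` class and `parse_llms_txt` function are hypothetical names.

```python
import re
from dataclasses import dataclass, field
from typing import Optional

# Matches list items of the form "- [Title](url)" or "- [Title](url): note".
LINK_RE = re.compile(
    r"^[-*]\s+\[(?P<title>[^\]]+)\]\((?P<url>[^)\s]+)\)(?::\s*(?P<note>.*))?$"
)


@dataclass
class LlmsTxt:
    title: str = ""
    summary: Optional[str] = None
    sections: dict[str, list[dict[str, str]]] = field(default_factory=dict)


def parse_llms_txt(text: str) -> LlmsTxt:
    """Split an llms.txt into its H1 title, first summary line, and H2 link lists."""
    doc = LlmsTxt()
    current: Optional[str] = None  # name of the H2 section being read
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if line.startswith("# ") and not doc.title:
            doc.title = line[2:].strip()
        elif line.startswith("> ") and doc.summary is None and current is None:
            doc.summary = line[2:].strip()
        elif line.startswith("## "):
            current = line[3:].strip()
            doc.sections[current] = []
        elif current is not None:
            match = LINK_RE.match(line)
            if match:  # free-form text inside a section is skipped
                doc.sections[current].append({
                    "title": match["title"],
                    "url": match["url"],
                    "note": (match["note"] or "").strip(),
                })
    return doc
```

A section named `Optional` has a special meaning in the proposal (its links can be skipped when a shorter context is needed); this sketch simply returns it like any other section and leaves that decision to the caller.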

What’s most interesting is that companies gain a direct way to influence what AIs read about them.

Just as we’ve spent years doing SEO to “please Google”, we’re now beginning to do AI content optimization to “please language models”.

llms.txt is, in every sense, a semantic positioning tool in the context of artificial intelligence.

Let’s imagine a future where every company has an AI-ready knowledge card — a file that describes who they are, what they do, and how they want to be represented.

Brands with well-structured, up-to-date, and consistent content will be favored in AI response processes, both in chatbots integrated with search engines and in automated support systems.

llms.txt could become a kind of “semantic ID card” for every digital presence.

In the future, we might even see more structured versions in JSON-LD, built on shared vocabularies such as schema.org, along with public validators.
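JSON-LD with the schema.org vocabulary is already used today to describe an organization on its web pages, so it gives a sense of what such a structured version could look like. Below is a minimal Python sketch that produces one from a few fields; `organization_jsonld` is a hypothetical helper, while `Organization`, `sameAs`, and `contactPoint` are real schema.org terms. The URLs and email address are placeholders.

```python
import json


def organization_jsonld(name: str, url: str, description: str,
                        same_as: list[str], email: str) -> str:
    """Build a schema.org Organization description serialized as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "description": description,
        "sameAs": same_as,  # official profiles that identify the same organization
        "contactPoint": {
            "@type": "ContactPoint",
            "contactType": "customer service",
            "email": email,
        },
    }
    return json.dumps(data, indent=2, ensure_ascii=False)


if __name__ == "__main__":
    snippet = organization_jsonld(
        name="HT&T Consulting",
        url="https://www.example.com/",
        description="Digital agency specializing in helping companies grow online.",
        same_as=["https://www.linkedin.com/company/example"],
        email="info@example.com",
    )
    # Wrapped in a script tag so it can be placed in a page's <head>.
    print(f'<script type="application/ld+json">\n{snippet}\n</script>')
```

The same source data could feed both this snippet and the Markdown llms.txt, keeping the two descriptions consistent.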

But everything starts from a simple idea: if we want AIs to speak well about us, we must give them the right data to read.

In this scenario, HT&T Consulting is already helping clients structure their digital information in a way that is also effective for artificial intelligence.

llms.txt is not just a file — it’s a new communication tool between businesses and intelligent models.

Understanding its potential now means securing a strong position in the future of search, marketing, and digital presence.

References: https://llmstxt.org/
